Experimentation-powered Marketing Science Agent

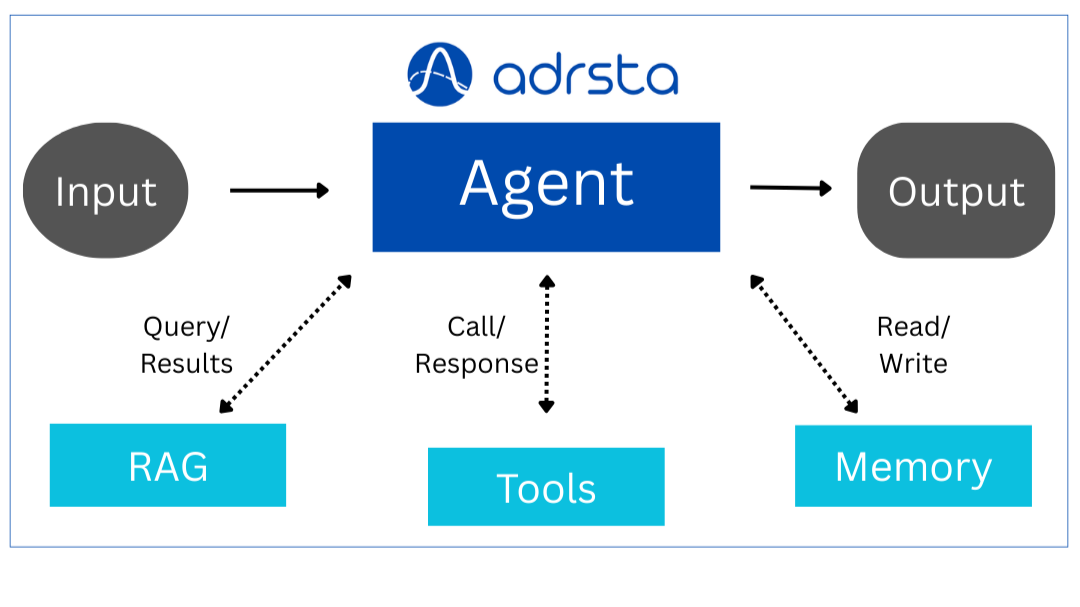

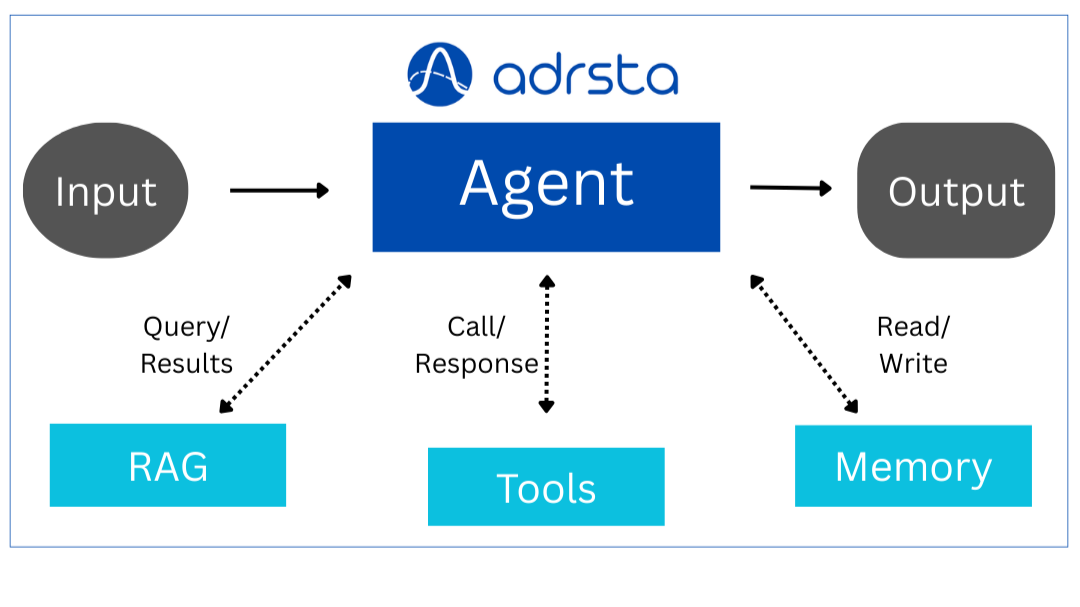

Most marketing measurement tools tell you what happened after your budget is already spent. At Adrsta, we're building something different: AI agents that don't just analyze, but act. The core question we're exploring: what if analytics didn't stop at insights, but directly powered autonomous agents that execute decisions?

The Problem with Traditional Attribution and Measurement

Today's marketing measurement follows a broken workflow:

- Analytics → Generate insights about what worked

- Humans → Interpret data and plan changes

- Manual execution → Implement budget shifts weeks later

- Repeat → Start over next quarter

By the time you act on insights, market conditions have changed and opportunities are gone.

Agent #1: Autonomous Marketing Mix Modeling

Instead of MMM dashboards that show "TV drove 30% of conversions," imagine an agent that automatically reallocates your TV budget to higher-performing channels.

How it works:

- Ingests real-time spend, sales, and seasonality data

- Runs machine learning models to estimate each channel's incremental contribution

- Agent layer consumes these insights and programmatically shifts budgets

- Built-in guardrails ensure changes stay within ROI floors and spend caps

- Learns from past decisions to improve future allocations
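
The contribution step can be illustrated with a minimal sketch. It assumes two transforms that are common in marketing mix models but are not confirmed as Adrsta's model: geometric adstock (spend keeps working for a few periods after it runs) and Hill saturation (each extra dollar buys less). The parameter values, channel names, and function names are hypothetical, and fitting the parameters from spend, sales, and seasonality data is out of scope.

```python
from typing import Dict, List


def geometric_adstock(spend: List[float], decay: float) -> List[float]:
    """Carry a fraction `decay` of each period's accumulated effect into the next period."""
    adstocked: List[float] = []
    carry = 0.0
    for x in spend:
        carry = x + decay * carry
        adstocked.append(carry)
    return adstocked


def hill_saturation(x: float, half_sat: float, shape: float) -> float:
    """Diminishing returns: 0 at x = 0, 0.5 at x = half_sat, approaching 1 as x grows."""
    if x <= 0:
        return 0.0
    return x ** shape / (x ** shape + half_sat ** shape)


def channel_contributions(
    spend_by_channel: Dict[str, List[float]],
    params: Dict[str, Dict[str, float]],
) -> Dict[str, float]:
    """Total modeled contribution per channel over the window (baseline and seasonality excluded)."""
    totals: Dict[str, float] = {}
    for channel, spend in spend_by_channel.items():
        p = params[channel]
        adstocked = geometric_adstock(spend, p["decay"])
        totals[channel] = sum(
            p["beta"] * hill_saturation(a, p["half_sat"], p["shape"]) for a in adstocked
        )
    return totals


def contribution_shares(totals: Dict[str, float]) -> Dict[str, float]:
    """Each channel's share of media-driven contribution, the dashboard-style percentage."""
    grand_total = sum(totals.values())
    return {ch: v / grand_total for ch, v in totals.items()}


# Hypothetical fitted parameters and three weeks of spend.
spend = {"tv": [100.0, 0.0, 0.0], "search": [50.0, 50.0, 50.0]}
params = {
    "tv": {"decay": 0.5, "half_sat": 100.0, "shape": 1.0, "beta": 200.0},
    "search": {"decay": 0.0, "half_sat": 50.0, "shape": 1.0, "beta": 100.0},
}
totals = channel_contributions(spend, params)
print(totals)
print(contribution_shares(totals))
```

With these made-up parameters, TV's one-week burst keeps contributing in weeks two and three through adstock, so its modeled contribution exceeds what its spend in any single week would suggest.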

The result: Insight → Agent Decision → Platform Action → Continuous Learning
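
One pass through that loop can be sketched as follows, assuming the insight arrives as per-channel marginal ROI estimates. The guardrail policy (budget only flows into channels at or above the ROI floor and under their spend cap, and at most 10% of total budget moves per cycle), the greedy single-pair shift, and the exponential-smoothing update are all illustrative choices, not a description of the production agent. `observe_roi` is a hypothetical measurement hook, and the platform call is left as a comment rather than invented.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Tuple


@dataclass
class Guardrails:
    roi_floor: float              # budget only flows into channels at or above this ROI
    spend_caps: Dict[str, float]  # per-channel maximum spend
    max_shift_frac: float = 0.1   # at most this share of total budget moves per cycle


def reallocate(budget: Dict[str, float], roi: Dict[str, float], g: Guardrails) -> Dict[str, float]:
    """Agent decision: move budget from the weakest channel to the strongest eligible one."""
    new_budget = dict(budget)
    donor = min(budget, key=lambda ch: roi[ch])
    eligible = [
        ch for ch in budget
        if ch != donor and roi[ch] >= g.roi_floor and budget[ch] < g.spend_caps[ch]
    ]
    if not eligible:
        return new_budget
    recipient = max(eligible, key=lambda ch: roi[ch])
    if roi[recipient] <= roi[donor]:
        return new_budget
    # The shift is limited by the step size, the donor's budget, and the recipient's cap.
    shift = min(
        g.max_shift_frac * sum(budget.values()),
        budget[donor],
        g.spend_caps[recipient] - budget[recipient],
    )
    new_budget[donor] -= shift
    new_budget[recipient] += shift
    return new_budget


def update_roi(prior: float, observed: float, learning_rate: float = 0.3) -> float:
    """Continuous learning: blend the ROI observed after acting into the running estimate."""
    return (1 - learning_rate) * prior + learning_rate * observed


def run_cycle(
    budget: Dict[str, float],
    roi: Dict[str, float],
    g: Guardrails,
    observe_roi: Callable[[Dict[str, float]], Dict[str, float]],
) -> Tuple[Dict[str, float], Dict[str, float]]:
    """One Insight -> Decision -> Action -> Learning cycle."""
    new_budget = reallocate(budget, roi, g)
    # Platform action would go here: pushing new_budget to each ad platform (not shown).
    observed = observe_roi(new_budget)  # hypothetical measurement hook
    new_roi = {ch: update_roi(roi[ch], observed[ch]) for ch in roi}
    return new_budget, new_roi


budget = {"tv": 500.0, "search": 300.0, "social": 200.0}
roi = {"tv": 0.8, "search": 2.0, "social": 1.5}
g = Guardrails(roi_floor=1.0, spend_caps={"tv": 600.0, "search": 350.0, "social": 400.0})
new_budget, new_roi = run_cycle(budget, roi, g, lambda b: {"tv": 0.9, "search": 1.8, "social": 1.5})
print(new_budget, new_roi)
```

Here 50 units move from TV to search: the 10% step limit would allow 100, but search's cap of 350 binds first.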

Agent #2: Autonomous Bidding Optimization

Traditional bid management follows simple rules, such as "lower bids if ROAS drops." Our bidding agent thinks strategically about auction dynamics and competitor behavior.

How it works:

- Core bidding engine predicts click-through rates and adjusts bids for maximum ROI

- Competition simulator tests strategies against different bidder behaviors

- Strategy planner uses past auction data to suggest winning approaches in complex scenarios
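
The first two components can be sketched together. The sketch assumes the CTR model already exists and only uses its output (`p_ctr`), values an impression as predicted CTR times value per click, and assumes sealed-bid first-price auctions. Because maximizing the ROI ratio alone would push bids toward zero, it maximizes expected surplus (value minus price) instead, a common stand-in. The competitor behaviors are hypothetical random bid distributions, and the strategy planner is not shown.

```python
import random
from typing import Callable, List, Sequence

# A competitor behavior returns that bidder's bid for one auction.
Competitor = Callable[[random.Random], float]


def impression_value(p_ctr: float, value_per_click: float) -> float:
    """Expected value of an impression: predicted click-through rate times value per click."""
    return p_ctr * value_per_click


def simulate_surplus(
    shade: float,
    value: float,
    competitors: Sequence[Competitor],
    n_auctions: int = 10_000,
    seed: int = 0,
) -> float:
    """Average surplus (value minus price) per auction when bidding shade * value.

    Assumes a sealed-bid first-price auction: the highest bid wins and pays its own bid.
    """
    rng = random.Random(seed)  # same seed, so every shade faces the same simulated rivals
    bid = shade * value
    total = 0.0
    for _ in range(n_auctions):
        best_rival = max(c(rng) for c in competitors)
        if bid > best_rival:
            total += value - bid
    return total / n_auctions


def best_shade(value: float, competitors: Sequence[Competitor], shades: Sequence[float]) -> float:
    """Pick the shading factor with the highest simulated surplus."""
    return max(shades, key=lambda s: simulate_surplus(s, value, competitors))


# Two hypothetical bidder populations.
passive: List[Competitor] = [lambda r: r.uniform(0.0, 1.0)]
aggressive: List[Competitor] = [lambda r: r.uniform(0.0, 1.0), lambda r: r.uniform(0.5, 1.2)]

value = impression_value(p_ctr=0.02, value_per_click=50.0)  # about 1.0 per impression
shades = [0.5, 0.6, 0.7, 0.8, 0.9, 1.0]
print(best_shade(value, passive, shades), best_shade(value, aggressive, shades))
```

Against the passive population the simulation favors bidding about half of value; adding an aggressive rival pushes the best shade up, because winning now requires a higher bid. Reusing one seed for every shade (common random numbers) keeps the comparison between shades from being swamped by simulation noise.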

The result: Self-learning bidding agents that don't just follow rules but adapt, simulate, and strategize like experienced traders.

Agent #3: Autonomous Experimentation

Most geo-lift experiments happen once or twice per year. Our experimentation agent runs continuous micro-tests to identify what's actually driving incremental results.

How it works:

- Experiment designer automatically selects test vs. control geographies

- Synthetic control engine runs real-time causal inference against dynamic baselines

- Decision agent interprets results and recommends scaling, reallocation, or shutdown

- Learning system improves test design based on past experiment outcomes
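
The synthetic control and decision steps can be sketched in plain Python. The sketch fits non-negative control-geo weights that sum to one, so that their weighted sum tracks the test geo before the test, using projected gradient descent on mean squared error (one standard way to fit synthetic control weights; the source does not specify the solver). It then reads the lift as the post-period gap between the test geo and its synthetic twin. The data are made up so the answer is known (the test geo is 0.6 * geo A + 0.4 * geo B, plus 2.0 per period of lift), and the 5% threshold in `recommend` is a hypothetical decision rule. Geo selection and the learning system are not shown.

```python
from typing import List, Sequence, Tuple


def project_to_simplex(v: Sequence[float]) -> List[float]:
    """Euclidean projection onto {w : w_j >= 0, sum(w) = 1} (sort-based algorithm)."""
    u = sorted(v, reverse=True)
    cumsum, theta = 0.0, 0.0
    for i, ui in enumerate(u, start=1):
        cumsum += ui
        t = (cumsum - 1.0) / i
        if ui - t > 0:
            theta = t
    return [max(x - theta, 0.0) for x in v]


def fit_synthetic_control(
    treated_pre: Sequence[float],
    controls_pre: Sequence[Sequence[float]],
    n_iter: int = 20_000,
) -> List[float]:
    """Convex weights over control geos; controls_pre[j][t] is geo j in pre-period t."""
    n_ctrl, n_t = len(controls_pre), len(treated_pre)
    # Safe step size: 1 / trace of the Hessian (the trace bounds its largest eigenvalue).
    trace = (2.0 / n_t) * sum(x * x for series in controls_pre for x in series)
    step = 1.0 / trace
    w = [1.0 / n_ctrl] * n_ctrl
    for _ in range(n_iter):
        resid = [treated_pre[t] - sum(w[j] * controls_pre[j][t] for j in range(n_ctrl))
                 for t in range(n_t)]
        grad = [-(2.0 / n_t) * sum(resid[t] * controls_pre[j][t] for t in range(n_t))
                for j in range(n_ctrl)]
        w = project_to_simplex([w[j] - step * grad[j] for j in range(n_ctrl)])
    return w


def estimate_lift(
    treated_post: Sequence[float], controls_post: Sequence[Sequence[float]], w: Sequence[float]
) -> Tuple[float, float]:
    """Total and relative lift of the test geo over its synthetic control."""
    synthetic = [sum(w[j] * controls_post[j][t] for j in range(len(w)))
                 for t in range(len(treated_post))]
    lift = sum(y - s for y, s in zip(treated_post, synthetic))
    return lift, lift / sum(synthetic)


def recommend(relative_lift: float, scale_threshold: float = 0.05) -> str:
    """Hypothetical rule: scale clear winners, shut down non-performers, otherwise reallocate."""
    if relative_lift >= scale_threshold:
        return "scale"
    if relative_lift <= 0:
        return "shutdown"
    return "reallocate"


controls_pre = [[10, 12, 11, 13, 12, 14], [20, 18, 21, 19, 22, 20], [5, 9, 4, 8, 6, 7]]
treated_pre = [14.0, 14.4, 15.0, 15.4, 16.0, 16.4]  # 0.6 * A + 0.4 * B by construction
controls_post = [[13, 12, 14], [21, 20, 19], [6, 8, 5]]
treated_post = [18.2, 17.2, 18.0]  # synthetic value + 2.0 per period

w = fit_synthetic_control(treated_pre, controls_pre)
lift, rel = estimate_lift(treated_post, controls_post, w)
print([round(x, 3) for x in w], round(lift, 2), recommend(rel))
```

On this toy data the fit recovers weights close to 0.6, 0.4, and 0, a total lift close to 6.0, and a "scale" recommendation.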

The result: Continuous experimentation that generates insights weekly, not quarterly.

Why This Matters

These aren't three separate tools. They're interconnected agents that make each other smarter:

- MMM insights inform bidding constraints

- Experimentation results update attribution models

- Competitive intelligence feeds strategic planning

- Each agent's learning improves the entire system
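
Two of these links can be sketched with simple, hypothetical formulas. For "experimentation results update attribution models," the sketch combines an MMM ROI estimate with an experiment's ROI estimate by inverse-variance weighting, which is equivalent to a normal-normal Bayesian update with the MMM estimate as the prior. For "MMM insights inform bidding constraints," it turns the calibrated ROI into a cost-per-click cap by scaling platform-attributed value down to incremental value and dividing by an ROI floor. The function names, the incrementality ratio, and all numbers are assumptions for illustration.

```python
import math
from typing import Tuple


def combine_estimates(
    mmm_roi: float, mmm_sd: float, exp_roi: float, exp_sd: float
) -> Tuple[float, float]:
    """Inverse-variance weighted ROI and its standard deviation.

    The more precise estimate (smaller standard deviation) gets more weight.
    """
    w_mmm = 1.0 / mmm_sd ** 2
    w_exp = 1.0 / exp_sd ** 2
    combined = (w_mmm * mmm_roi + w_exp * exp_roi) / (w_mmm + w_exp)
    return combined, math.sqrt(1.0 / (w_mmm + w_exp))


def incremental_bid_cap(
    attributed_value_per_click: float,
    platform_roas: float,
    calibrated_roi: float,
    roi_floor: float,
) -> float:
    """Highest cost per click whose incremental return still clears the ROI floor (hypothetical)."""
    incrementality = calibrated_roi / platform_roas  # share of attributed value that is incremental
    return attributed_value_per_click * incrementality / roi_floor


# MMM says ROI 2.0 +/- 0.5; a geo experiment says 1.0 +/- 0.25 (made-up numbers).
combined_roi, combined_sd = combine_estimates(2.0, 0.5, 1.0, 0.25)
cap = incremental_bid_cap(4.0, platform_roas=3.0, calibrated_roi=combined_roi, roi_floor=1.0)
print(round(combined_roi, 3), round(combined_sd, 3), round(cap, 2))
```

Here the experiment's tighter interval pulls the combined ROI from 2.0 toward the experiment's 1.0, landing at 1.2, and the bidding agent's cost-per-click cap tightens accordingly.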